Expectation-Maximization Algorithm Step-By-Step

By: Amelia

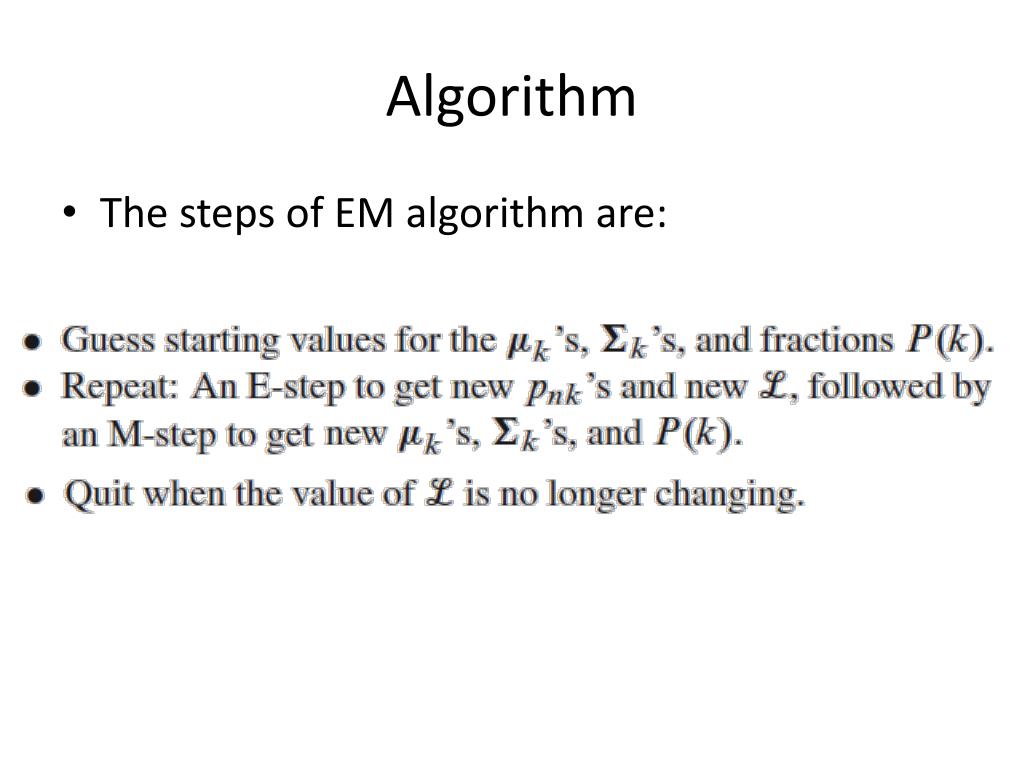

Intuitively, how can we fit a mixture of Gaussians? The parameters are optimized with the Expectation-Maximization (EM) algorithm, which alternates between two steps. EM is well suited to problems of this sort because it produces maximum-likelihood (ML) estimates of parameters when part of the data is hidden or missing.
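
For reference, a mixture of $K$ Gaussians models each data point with the density below. The notation is assumed here rather than taken from the original: $\pi_k$ are the mixing weights and $\mu_k, \Sigma_k$ the component means and covariances.

```latex
p(x \mid \theta) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k),
\qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1,
\qquad \theta = \{\pi_k, \mu_k, \Sigma_k\}_{k=1}^{K}
```

The hidden variable is the index of the component that generated each point. Because it is unobserved, the log-likelihood $\sum_n \log \sum_k \pi_k \mathcal{N}(x_n \mid \mu_k, \Sigma_k)$ has a sum inside the logarithm, which is why there is no closed-form maximizer and an iterative method is needed.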

The EM algorithm provides an iterative solution for maximum likelihood estimation of parameters, and it is a popular choice for estimating the parameters of latent variable models. It works by repeatedly maximizing a lower bound on the log-likelihood.
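
The iterative alternation can be written as a small, model-agnostic driver. This is a hypothetical helper: `run_em` and its callback arguments are names introduced for illustration, and the sketch assumes the caller supplies an E-step, an M-step, and a log-likelihood for their particular model. It stops once the gain in log-likelihood falls below `tol`.

```python
from typing import Callable, TypeVar

P = TypeVar("P")  # parameter type (a float, a tuple, arrays, ...)
S = TypeVar("S")  # type of the E-step statistics (e.g. responsibilities)


def run_em(
    params: P,
    e_step: Callable[[P], S],
    m_step: Callable[[S], P],
    log_likelihood: Callable[[P], float],
    tol: float = 1e-8,
    max_iter: int = 500,
) -> tuple[P, list[float]]:
    """Alternate E- and M-steps until the log-likelihood stops improving."""
    history = [log_likelihood(params)]
    for _ in range(max_iter):
        stats = e_step(params)  # E-step: expected latent quantities under current params
        params = m_step(stats)  # M-step: maximize the expected complete-data log-likelihood
        history.append(log_likelihood(params))
        # EM never decreases the likelihood, so a tiny gain signals convergence.
        if history[-1] - history[-2] < tol:
            break
    return params, history
```

Returning the log-likelihood history makes it easy to check that each iteration is non-decreasing, which is a useful sanity test for any hand-written E-step or M-step.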

Algorithm Breakdown: Expectation Maximization

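The expectation-maximization algorithm is used to find maximum likelihood or maximum a posteriori (MAP) estimates of parameters in latent variable models, that is, models that contain hidden (unobserved) variables. Each iteration involves two steps: the expectation step (E-step), followed by the maximization step (M-step). In the E-step, we find the conditional expectation of the complete-data log-likelihood, taken over the latent variables given the observed data and the current parameter estimates; in the M-step, we choose the parameters that maximize this expectation.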
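
The two steps can be written compactly. In the notation assumed here ($X$ the observed data, $Z$ the latent variables, $\theta^{(t)}$ the estimate after iteration $t$), the E-step builds the function conventionally called $Q$ and the M-step maximizes it. For MAP estimation, a log-prior term $\log p(\theta)$ is added to $Q$ before maximizing.

```latex
% E-step: expected complete-data log-likelihood under the current posterior over Z
Q(\theta \mid \theta^{(t)}) = \mathbb{E}_{Z \sim p(Z \mid X, \theta^{(t)})}\left[\log p(X, Z \mid \theta)\right]

% M-step: the maximizer becomes the next estimate
\theta^{(t+1)} = \arg\max_{\theta} \, Q(\theta \mid \theta^{(t)})
```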

The EM algorithm is an iterative procedure for computing the maximum likelihood estimator when only a subset of the data is available. It is an iterative optimization algorithm for parameter estimation in models with hidden (or unobserved) variables, and it has been widely applied to many statistical modeling problems. A good way to understand it is through the Gaussian Mixture Model (GMM), from which the general form of the EM algorithm can then be derived.
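
As a concrete case where only part of the data is observed, consider the classic genetic-linkage counts often used to illustrate EM: 197 observations fall into four cells with probabilities $(\tfrac12 + \tfrac\theta4, \tfrac{1-\theta}4, \tfrac{1-\theta}4, \tfrac\theta4)$. The sketch below assumes the first cell merges two unobserved sub-cells with probabilities $\tfrac12$ and $\tfrac\theta4$; `linkage_em` and `log_likelihood` are hypothetical names for this illustration.

```python
import math


def linkage_em(counts=(125, 18, 20, 34), theta=0.5, n_iter=50):
    """EM for a one-parameter multinomial whose first cell hides two sub-cells."""
    y1, y2, y3, y4 = counts
    for _ in range(n_iter):
        # E-step: expected count in the hidden sub-cell with probability theta/4,
        # given the observed total y1 and the current theta.
        x2 = y1 * (theta / 4) / (0.5 + theta / 4)
        # M-step: complete-data MLE, as if x2 had been observed directly.
        theta = (x2 + y4) / (x2 + y2 + y3 + y4)
    return theta


def log_likelihood(theta, counts=(125, 18, 20, 34)):
    """Observed-data log-likelihood, up to an additive constant."""
    y1, y2, y3, y4 = counts
    return y1 * math.log(2 + theta) + (y2 + y3) * math.log(1 - theta) + y4 * math.log(theta)


theta_hat = linkage_em()
# For these counts the MLE solves 197*theta**2 - 15*theta - 68 = 0, so EM can be
# checked against the closed-form root.
closed_form = (15 + math.sqrt(15**2 + 4 * 197 * 68)) / (2 * 197)
print(f"EM: {theta_hat:.6f}  closed form: {closed_form:.6f}")
```

Both numbers should agree (about 0.6268). The point of the example is that the complete-data M-step is trivial once the hidden count is replaced by its expectation.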

The EM algorithm is not itself a latent variable model; it is an algorithm for finding locally maximal likelihood parameters of a statistical model with latent variables. It was formalized by Arthur Dempster, Nan Laird, and Donald Rubin in 1977. EM is an iterative, unsupervised algorithm: it starts from initial guesses for the parameters and uses the evidence in the data to decide how those guesses should be revised.

Step-by-Step Tutorial: Implementing Expectation-Maximization for Gaussian Mixture Models (EM for GMM)

Maximization step (M-step): using the estimates from the E-step, the algorithm updates the parameters by maximizing the expected log-likelihood function, which refines the parameter estimates. EM is used for parameter estimation in probabilistic models with incomplete data, such as Gaussian Mixture Models and hidden Markov models; in each case, it approaches the maximum likelihood estimates of the parameters through these two major steps.

In statistics, an expectation–maximization (EM) algorithm is an iterative method for finding maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models that depend on unobserved latent variables. Each iteration of the EM algorithm consists of two processes: the E-step and the M-step. In the expectation step (E-step), the missing data are estimated given the observed data and the current estimate of the model parameters.

The expectation-maximization algorithm

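I want to apply the EM algorithm to estimate the covariance matrices $Q$ and $R$ (in the usual Kalman-filter notation, the process-noise and measurement-noise covariances) in an online fashion. First, we can apply the forward algorithm (the Kalman filter) to find $p(z_n \mid x_n)$, which is Gaussian for a linear-Gaussian state-space model.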

In the maximization step (M-step), we calculate new parameter values by maximizing the expected log-likelihood. These new values are then used in the next E-step, and the two steps are repeated until convergence.

Somewhat surprisingly, it is possible to develop an algorithm, known as the expectation-maximization (EM) algorithm, for computing the maximum likelihood estimate in such models. In summary, EM is an iterative method used in machine learning and statistics to estimate parameters in probabilistic models with latent variables or missing data.

The trick the EM algorithm uses is to replace the unobserved variables $Z_i$ by their expectation and then maximize the likelihood. With this in mind, the expectation and maximization steps can be derived. The E-step holds the current value of $\theta$ fixed and sets $Q_i(z^{(i)})$ so that Jensen's lower bound on the log-likelihood holds with equality, which happens when $Q_i(z^{(i)}) = p(z^{(i)} \mid x^{(i)}; \theta)$. The M-step then maximizes this bound with respect to $\theta$ while holding the $Q_i$ fixed.
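
Written out in the notation of this passage ($x^{(i)}$ the observed examples, $z^{(i)}$ their latent variables, $Q_i$ a distribution over $z^{(i)}$), the lower bound comes from Jensen's inequality applied to the concave logarithm:

```latex
\begin{aligned}
\ell(\theta) &= \sum_i \log \sum_{z^{(i)}} p(x^{(i)}, z^{(i)}; \theta) \\
&= \sum_i \log \sum_{z^{(i)}} Q_i(z^{(i)}) \, \frac{p(x^{(i)}, z^{(i)}; \theta)}{Q_i(z^{(i)})} \\
&\ge \sum_i \sum_{z^{(i)}} Q_i(z^{(i)}) \log \frac{p(x^{(i)}, z^{(i)}; \theta)}{Q_i(z^{(i)})}
\end{aligned}
```

The bound is tight exactly when the ratio inside the logarithm does not depend on $z^{(i)}$, which gives the E-step choice $Q_i(z^{(i)}) = p(z^{(i)} \mid x^{(i)}; \theta)$.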

How Expectation Maximization Works

EM begins with the E-step. Here, the algorithm makes an educated guess about the latent variables: it computes their posterior distribution given the observed data and the current parameter estimates.

At the lowest, most concrete level, there are different EM algorithms for fitting many particular probabilistic models; a mixture of Gaussians is just one example.

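Maximization step (M-step): update the mean, covariance, and weight parameters, replacing the previous estimates, to maximize the expected log-likelihood computed in the E-step.

Repeat: alternate the E-step and M-step until the log-likelihood stops changing appreciably.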
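
For reference, the standard closed-form updates for a mixture of $K$ Gaussians are summarized below. The notation is assumed here: $\gamma_{nk}$ is the responsibility of component $k$ for point $x_n$, $\pi_k$, $\mu_k$, $\Sigma_k$ are the weights, means, and covariances, and $N$ is the number of points.

```latex
\begin{aligned}
\text{E-step:}\quad \gamma_{nk} &= \frac{\pi_k \, \mathcal{N}(x_n \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(x_n \mid \mu_j, \Sigma_j)},
\qquad N_k = \sum_{n=1}^{N} \gamma_{nk} \\
\text{M-step:}\quad \mu_k^{\text{new}} &= \frac{1}{N_k} \sum_{n=1}^{N} \gamma_{nk} \, x_n,
\qquad \pi_k^{\text{new}} = \frac{N_k}{N} \\
\Sigma_k^{\text{new}} &= \frac{1}{N_k} \sum_{n=1}^{N} \gamma_{nk} \, (x_n - \mu_k^{\text{new}})(x_n - \mu_k^{\text{new}})^{\top}
\end{aligned}
```

Note that the covariance update uses the freshly updated means.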

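The first part of this post will focus on Gaussian Mixture Models, as expectation maximization is the standard optimization algorithm for these models. A simpler illustration is the classic two-coin example, in which each set of tosses comes from one of two biased coins but the identity of the coin is not recorded. A basic approach guesses the most likely coin for each set and re-estimates the biases; the expectation maximization algorithm is a refinement of this idea. Rather than picking the single most likely completion of the missing coin assignments on each iteration, it computes probabilities for each possible completion using the current parameters and re-estimates the biases from these weighted completions.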
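
The soft-assignment idea for the two-coin example can be sketched in a few lines. This is an illustration under stated assumptions: `coin_em` is a hypothetical name, each set of tosses is assumed to use coin A or coin B with a fixed, equal prior probability (not learned), and the head counts in the usage line are illustrative, not data from the original.

```python
def coin_em(heads, flips, theta_a=0.6, theta_b=0.5, n_iter=20):
    """Soft-assignment EM for two coins whose identity per set of tosses is unobserved.

    Assumes each set uses coin A or coin B with equal, fixed prior probability.
    """
    for _ in range(n_iter):
        ha = ta = hb = tb = 0.0  # expected head/tail counts attributed to each coin
        for h, n in zip(heads, flips):
            # E-step: posterior probability that this set used coin A
            # (the binomial coefficient and the equal prior cancel).
            like_a = theta_a**h * (1 - theta_a) ** (n - h)
            like_b = theta_b**h * (1 - theta_b) ** (n - h)
            w_a = like_a / (like_a + like_b)
            # Split this set's heads and tails between the coins in proportion to w_a.
            ha += w_a * h
            ta += w_a * (n - h)
            hb += (1 - w_a) * h
            tb += (1 - w_a) * (n - h)
        # M-step: weighted maximum-likelihood estimate of each coin's bias.
        theta_a = ha / (ha + ta)
        theta_b = hb / (hb + tb)
    return theta_a, theta_b


# Illustrative usage: five sets of 10 tosses each.
theta_a, theta_b = coin_em(heads=[5, 9, 8, 4, 7], flips=[10] * 5)
```

Replacing `w_a` with a hard 0/1 choice of the more likely coin would give the basic "single most likely completion" variant; keeping it fractional is what makes this EM.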

Expectation-maximization (EM) is a method to find the maximum likelihood estimator of a parameter of a probability distribution. We can maximize the log-likelihood by maximizing its lower bound, the evidence lower bound (ELBO), and this yields the update formulas. The EM algorithm then simply repeats the E-step (computing the expectation) and the M-step (maximizing) until convergence.
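
To see numerically why maximizing the ELBO is a safe substitute for maximizing the log-likelihood, the sketch below uses a made-up discrete model: a single fixed observation $x$ and a latent $z \in \{0, 1, 2\}$, with hypothetical joint probabilities $p(x, z)$. Any distribution $q$ over $z$ gives a bound no larger than $\log p(x)$, and the posterior $p(z \mid x)$ (the E-step's choice) makes the bound exact.

```python
import math
import random


def elbo(q, joint):
    """Evidence lower bound: sum_z q(z) * log(p(x, z) / q(z)) for one observation x."""
    return sum(qz * math.log(pz / qz) for qz, pz in zip(q, joint) if qz > 0)


# Hypothetical joint probabilities p(x, z) for one fixed x and z in {0, 1, 2}.
joint = [0.10, 0.05, 0.15]
log_evidence = math.log(sum(joint))          # log p(x) = log sum_z p(x, z)
posterior = [p / sum(joint) for p in joint]  # p(z | x): the E-step's choice of q

# Random distributions q never push the bound above log p(x).
rng = random.Random(0)
for _ in range(5):
    w = [rng.random() for _ in joint]
    q = [wi / sum(w) for wi in w]
    assert elbo(q, joint) <= log_evidence + 1e-12

print(f"log p(x) = {log_evidence:.6f}, ELBO at posterior = {elbo(posterior, joint):.6f}")
```

The gap between the two quantities is the KL divergence from $q$ to the posterior, which is why the E-step closes it completely and the M-step can then push the bound, and with it the log-likelihood, upward.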

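EM algorithm in GMM

The EM algorithm consists of two steps: the E-step and the M-step. First, the model parameters can be randomly initialized. In the E-step, the algorithm then computes, for each data point, the probability that it was generated by each Gaussian component (its responsibilities) under the current parameters.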
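
A compact one-dimensional version of random initialization, the E-step, and the M-step can be sketched with NumPy. This is an illustrative implementation under stated assumptions, not the method of any particular source: `gmm_em_1d` is a hypothetical name, the data are assumed to be a 1-D array, initial means are drawn at random from the data with a shared starting variance, and a fixed iteration count replaces a convergence test.

```python
import numpy as np


def gmm_em_1d(x, k=2, n_iter=100, seed=0):
    """Fit a 1-D Gaussian mixture with EM; returns weights, means, variances, log-likelihoods."""
    rng = np.random.default_rng(seed)
    n = x.shape[0]
    # Random initialization: means drawn from the data, shared variance, uniform weights.
    means = rng.choice(x, size=k, replace=False)
    variances = np.full(k, x.var())
    weights = np.full(k, 1.0 / k)
    log_liks = []
    for _ in range(n_iter):
        # E-step: weighted component densities pi_j * N(x_i | mu_j, var_j), shape (n, k).
        dens = weights * np.exp(-0.5 * (x[:, None] - means) ** 2 / variances) / np.sqrt(
            2 * np.pi * variances
        )
        total = dens.sum(axis=1, keepdims=True)
        log_liks.append(float(np.log(total).sum()))  # log-likelihood at the current params
        resp = dens / total  # responsibilities: P(component j | x_i, current params)
        # M-step: closed-form updates from the responsibility-weighted data.
        nk = resp.sum(axis=0)
        weights = nk / n
        means = (resp * x[:, None]).sum(axis=0) / nk
        variances = (resp * (x[:, None] - means) ** 2).sum(axis=0) / nk
    return weights, means, variances, log_liks


# Illustrative usage on synthetic data drawn from two Gaussians.
data_rng = np.random.default_rng(1)
x = np.concatenate([data_rng.normal(-2.0, 0.5, 300), data_rng.normal(3.0, 1.0, 700)])
weights, means, variances, log_liks = gmm_em_1d(x)
```

For real data, working in log space (log-sum-exp) is more robust than exponentiating densities directly; this sketch keeps plain densities for readability.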

Understand the Expectation-Maximization (EM) Algorithm, its mathematical foundation, and how it is used to find maximum likelihood estimates in models with latent variables. Learn about its

Expectation-Maximization as a Solution

Even though the incomplete information makes things hard for us, the Expectation-Maximization algorithm still lets us estimate the parameters.
