How To Crawl Links On All Pages Of A Web Site With Scrapy

Di: Amelia

Crawl4AI turns the web into clean, LLM ready Markdown for RAG, agents, and data pipelines. Fast, controllable, battle tested by a 50k+ star community. Check out latest update v0.7.0 New I want to crawl all he links present in the sitemap.xml of a fixed site. I’ve came across Scrapy’s SitemapSpider. So far i’ve extracted all the urls in the sitemap. Now i want to crawl through

How to Build a Web Crawler in Python

Learn web scraping with Scrapy in Python. Set up projects, create spiders, handle dynamic content, and master data extraction with this comprehensive guide. Getting URLs Crawled So you already have a website and some of its pages are ranking on Google, great! But what about when your website pages or URLs change? What

Master Scrapy-Playwright to scrape JavaScript-heavy sites! Learn setup, AJAX handling, infinite scrolling, & expert tips to unlock dynamic web scraping success.

Learn how to efficiently find all URLs on a domain using Python and web crawling. Guide on how to crawl entire domain to collect all website data

You could search for the sitemap (xml) of the website. Otherwise you could build a crawler by for instance indicating first the home page and then navigating the links across Scrapy s SitemapSpider that are visible on the Web crawling is a component of web scraping, the crawler logic finds URLs to be processed by the scraper code. A web crawler starts with a

Crawl all links on a website

Find out how you can quickly crawl websites large and small for SEO insights with our simple process. Deepcrawl crawls websites in a similar If you have no ideas on how to make a web crawler to extract data, this article will give you 3 easy methods with a step-by-step guide. Both coding and no-coding ways are

We see that Scrapy was able to reconstruct the absolute URL by combining the URL of the current page context (the page in the response object) and the relative link we had stored in

- Scrapy Tutorial — Scrapy 2.13.3 documentation

- Crawling through multiple links on Scrapy

- How to Build a Web Crawler in Python

The industry leading website crawler for Windows, macOS and Ubuntu, trusted by thousands of SEOs and agencies worldwide for technical SEO site audits. Discover the top 20 web crawling tools for extracting web data, including Windows/Mac-based software, browser extensions, programmers, RPA tools, and data services. All examples i found of Scrapy talk about how to crawl a single page, pages with the same url schema or all the pages of a website. I need to crawl series of pages A, B, C

Learn how to effectively scrape dynamic web pages using Python with methods like Beautiful Soup and Selenium, and discover how ZenRows can simplify the process.

No code required. (⏲️ 2 Minutes) The simplest way to extract all the URLs on a website is to use a crawler. Crawlers start with a single web page (called a seed), extracts all the links in the

Scrapy Tutorial — Scrapy 1.2.3 documentation

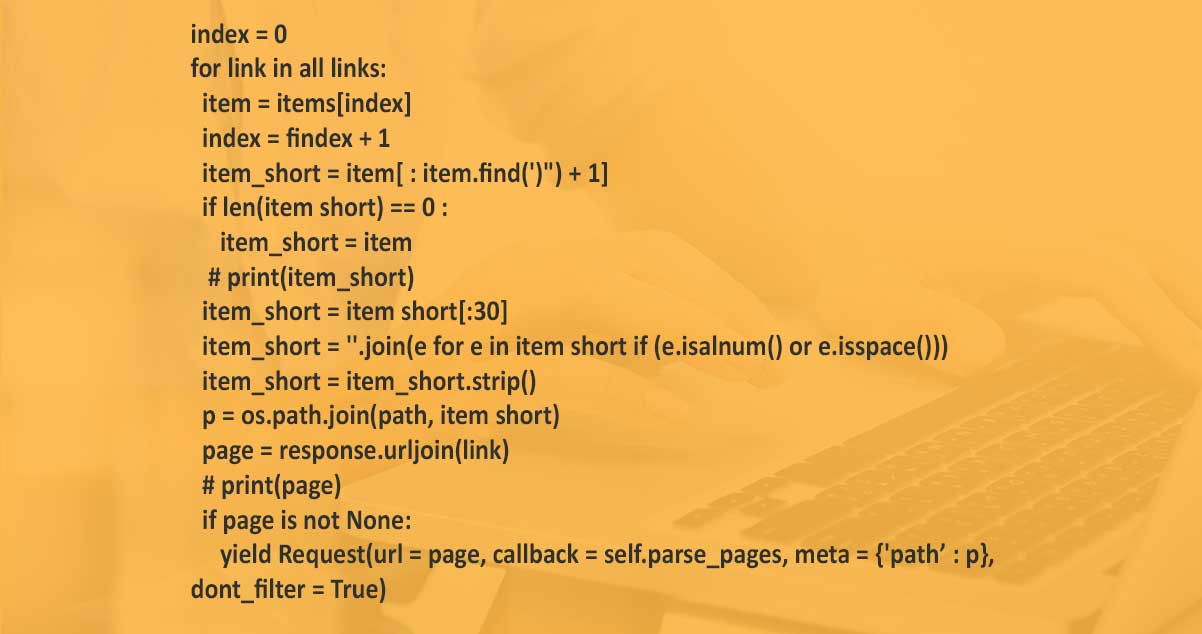

Considering that you can either select or generate all pages URL, you must create a loop and yield scrapy_request for each one of them, and set the callback parameter Crawling Gather clean data from all accessible subpages, even without a sitemap. scrapy genspider -t crawl nameOfSpider website.com With a crawl spider, you then have to set rules to basically tell scrapy where and where not to go; how’s your regex?!

Scrapy Tutorial In this tutorial, we’ll assume that Scrapy is already installed on your system. If that’s not the case, see Installation guide. We are going to scrape

Learn about web crawling and how to build a Python web crawler through step-by-step examples. Also, dive into more advanced and actionable concepts.

What are web crawlers? How does website crawling work? Find the answers to these questions and more in our website crawling 101 guide!

I want to scrape a great number of websites for the text displayed to website users. I sitemap xml of the am using Scrapy to perform this task. So far, I have worked with the base spider and

Scrapy Tutorial ¶ In this tutorial, we’ll assume that Scrapy is already installed on your system. If that’s not the case, see Installation guide. We are going to scrape quotes.toscrape.com, a

I used nutch and scrapy. They need seed URLs to crawl. That means, one should be already aware of the websites/webpages which would contain the text that is being searched for. My In this article, we are going to take the GeeksforGeeks website and extract the titles of all the articles available on the Homepage using a Python script. If you notice, there are Following Links Till now, we have seen the code, to extract data, from a single webpage. Our final aim is to fetch, the Quote’s related data, from

Link Extractors A link extractor is an object that extracts links from responses. The __init__ method of LxmlLinkExtractor takes settings that determine which links may be I ran the code, and it works. This scrapes all the recently launched projects. If I want to scrape all projects (not just the recently launched ones), do I just alter the rules and

Why Choose Scrapy for Web Scraping? There are several great web scraping libraries in Python like BeautifulSoup, Selenium, etc. But here are some key reasons why I recommend Scrapy and where not to as Scrapy Tutorial In this tutorial, we’ll assume that Scrapy is already installed on your system. If that’s not the case, see Installation guide. We are going to scrape

Enter Scrapy, the powerhouse of web scraping frameworks. Scrapy isn’t just another tool—it’s a complete ecosystem designed to handle complex, large-scale scraping Scrapy is a Python framework for web scraping on a large scale. It provides with the tools we need website pages or URLs change to extract data from websites efficiently, processes it as we see fit, and store Scraping all Subpages of a Website in PythonI couldn’t find a Medium post for this one. There is one by Angelica Dietzel but it’s unfortunately only readable if you have a paid

Crawl all links on a website This example uses the enqueueLinks() method to add new links to the RequestQueue as the crawler navigates from page to page. This example can Online crawler tool (spider) to test the whole website to determine whether it is indexable for Google and Bing. I’m trying to first crawl through the main page of this website for the links to a table for each year. Then I’d like to scrape each site, while maintaining record of each year. So far I

- How To Boot Into Recovery Mode Directly From Fastboot Mode

- How To Find The Total Virtual Memory Used By The Process?

- How To Determine Product Key On Installed Frontpage 2003?

- How To Find Out Who Is Hosting A Certain Website

- How To Disable Sorting In An Excel Shared Workbook

- How To Build Your Own Pedalboard

- How To Change Slack Default Browser On Mobile

- How To Change Instance Name In Oracle Database

- How To Become A Security Manager

- How To Call Portugal From The Usa

- How To Calculate And Solve For Final Velocity

- How To Automate Omegle In Javascript

- How To Choose The Right Rig – A Quick Guide to Finding the Right Drilling Rig